Building a Private "Central Intelligence Unit": How One Organisation Modernised Research Without Exposing Its Data

There's a moment every research-heavy organisation hits.

A question comes in from leadership. The team knows the answer is in the documents—somewhere. Internal reports, archived emails, support tickets, product specs, PDFs in a shared drive. Everyone stops what they're doing to search, synthesise, summarise, and validate. Hours go by. The answer eventually emerges—but slowly, manually, and often inconsistently.

In late 2023, one of our clients was living this moment daily. They wanted to speed up research, reduce manual work, and empower teams to make decisions faster. But there was a catch: the work involved highly sensitive internal data that could not be sent to external AI tools. GDPR compliance wasn't a checkbox—it was a survival requirement.

So the question became: How do you get the intelligence and efficiency of modern AI—without shipping your data to someone else's servers?

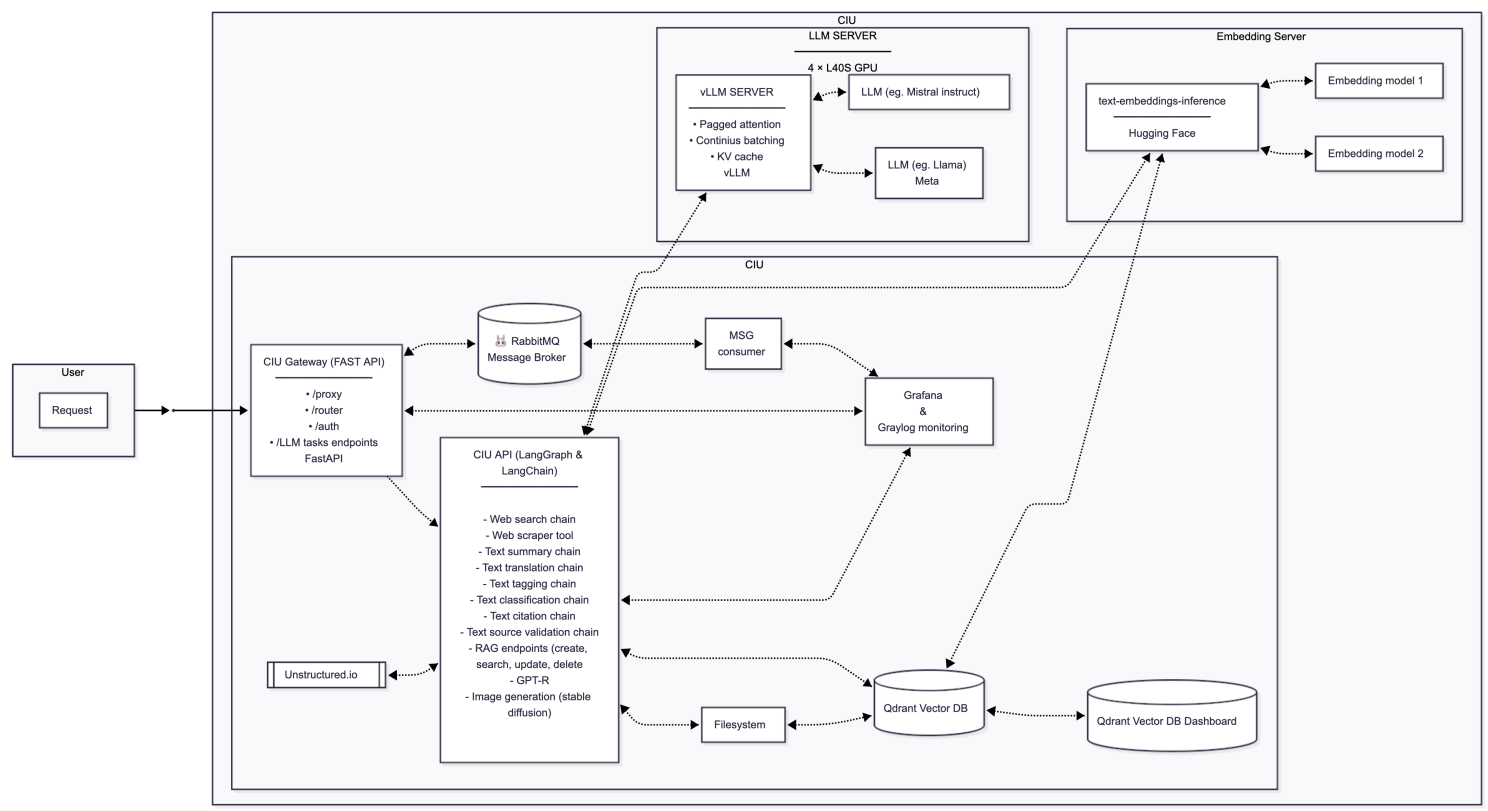

Our answer was to build a private, modular Central Intelligence Unit (CIU): a secure, in-house AI system that acts as a research assistant, analyst, and summariser—running entirely inside the client's controlled environment.

The Central Intelligence Unit architecture showing private LLM and embedding server within the client's secure infrastructure.

The Problem: Cloud LLMs are a Data Privacy Liability

Most AI prototypes start by calling hosted models like GPT-4 or Gemini through an external API. That is fast, but it creates an unacceptable data exposure problem for regulated industries.

The Technical Dilemma: The most valuable data for any RAG system is proprietary—financial reports, internal support tickets, legal memos. If you send this data to an external provider for embedding or generation, you've lost full control over it.

The Business Impact: For our client operating in the EU, this wasn't an engineering preference; it was a foundational risk. A single breach of sensitive data could jeopardise their ability to operate.

Our approach pivoted immediately: the Central Intelligence Unit (CIU) couldn't be a consumer of external AI; it had to be a private AI ecosystem built for internal consumption.

Engineering the Fortress: Private LLMs and Modular RAG

The core of the solution was an architecture that is GDPR-compliant by design. This required us to host two critical components in the client's private cloud: the Embedding Server and the Generation LLM.

1. Decoupling Security and Scale

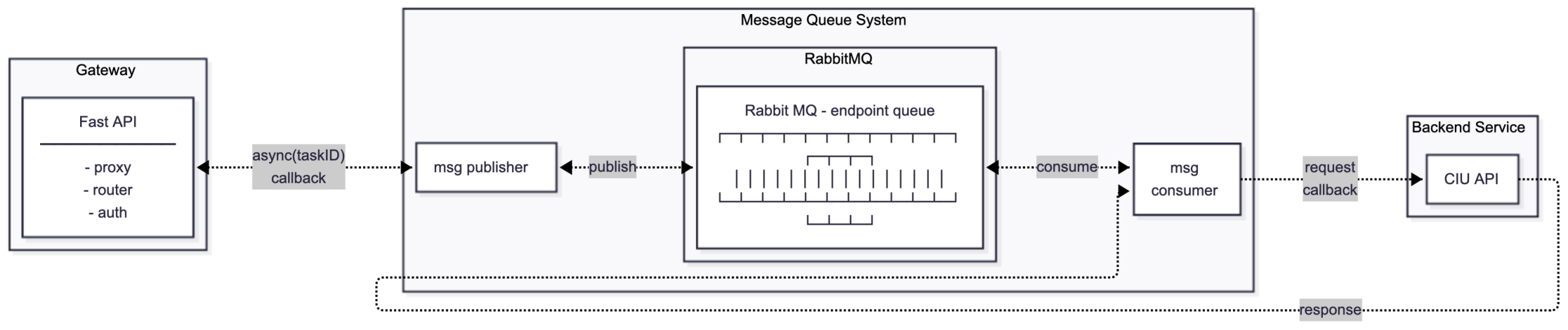

We built the core on a modular, containerized stack (Python, FastAPI, Docker) with RabbitMQ for asynchronous processing. This allowed long-running, resource-intensive tasks—like complex document analysis—to be managed without blocking the user interface.
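To make the non-blocking pattern concrete, here is a minimal sketch of the submit-then-process flow. It is not the project's code: an in-process `asyncio.Queue` stands in for RabbitMQ, and `analyse_document`, `submit`, and the job-status dictionary are hypothetical names chosen for illustration.

```python
import asyncio
import uuid


async def analyse_document(text: str) -> dict:
    """Hypothetical long-running task; here it only counts words."""
    await asyncio.sleep(0.01)  # simulate slow, resource-intensive work
    return {"words": len(text.split())}


async def worker(queue: asyncio.Queue, results: dict) -> None:
    """Consume jobs until cancelled, recording each outcome by job id."""
    while True:
        job_id, text = await queue.get()
        try:
            results[job_id] = {"status": "done", **await analyse_document(text)}
        except Exception as exc:  # a failed job must not kill the worker
            results[job_id] = {"status": "failed", "error": str(exc)}
        finally:
            queue.task_done()


async def submit(queue: asyncio.Queue, results: dict, text: str) -> str:
    """What an API handler would do: enqueue the job and return at once."""
    job_id = uuid.uuid4().hex
    results[job_id] = {"status": "queued"}
    await queue.put((job_id, text))
    return job_id


async def demo() -> dict:
    queue: asyncio.Queue = asyncio.Queue()  # stand-in for the message broker
    results: dict = {}
    consumer = asyncio.create_task(worker(queue, results))

    job_id = await submit(queue, results, "quarterly report text here")
    status_at_submit = results[job_id]["status"]  # caller is not blocked

    await queue.join()  # wait for the worker only so the demo can finish
    consumer.cancel()
    await asyncio.gather(consumer, return_exceptions=True)
    return {"at_submit": status_at_submit, "final": results[job_id]}


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

The caller gets a job id back immediately and polls for the result later, which is the property that keeps the user interface responsive. With a real broker, the worker would run in a separate container and the status store would live outside the process.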

For the LLM itself, we couldn't just use a small, private model. The research demanded state-of-the-art capability.

The Solution: We deployed powerful, optimized models, like Llama-3.1-Nemotron-70B, on a private GPU cluster (4x L40s) using vLLM for high-throughput serving.
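vLLM can expose an OpenAI-compatible HTTP server, so internal services can talk to the private model with an ordinary chat-completions request. The sketch below builds such a request using only the standard library. The host name `llm.internal`, the model identifier, and the prompt wording are assumptions for illustration; the source does not describe the actual endpoint or launch options.

```python
import json
import urllib.request

# Assumptions: placeholder internal host and model id, not the client's values.
BASE_URL = "http://llm.internal:8000/v1"
MODEL = "nvidia/Llama-3.1-Nemotron-70B-Instruct-HF"


def build_chat_request(question: str, context: str) -> urllib.request.Request:
    """Build a chat-completions request aimed at the private endpoint."""
    payload = {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": "Answer only from the provided context."},
            {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
        ],
        "temperature": 0.0,
        "max_tokens": 512,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question: str, context: str, timeout: float = 60.0) -> str:
    """Send the request and return the generated text (needs a live server)."""
    request = build_chat_request(question, context)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Because the request only ever targets an address inside the private network, both the query and the document context stay within the client's infrastructure.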

This move was foundational. It meant that confidential data—from the user's initial query to the entire uploaded document—never left the client's secure infrastructure. Data security was not an afterthought; it was the architecture's central feature.

Asynchronous processing architecture using RabbitMQ for handling resource-intensive document analysis tasks.

2. Advanced RAG as an Accuracy Lever

Privacy alone isn't enough; the answers must be useful. We evolved the RAG pipeline, built on Qdrant, beyond simple vector search.

The Workflow: To maximize retrieval precision, we implemented sophisticated techniques: hybrid search, advanced reranking, semantic chunking, and the ability to pull information from diverse sources (internal knowledge bases, live web search, and Zendesk tickets).
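As an illustration of the hybrid search step, the sketch below merges a dense (vector) ranking and a keyword ranking with reciprocal rank fusion. This is one common fusion method, swapped in here for illustration; the source does not say which method the pipeline used. The document ids and the constant `k=60` are assumptions.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked id lists; each hit contributes 1 / (k + rank)."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by id for stable output.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical result lists from a vector search and a keyword search.
dense_hits = ["doc_a", "doc_b", "doc_c"]
keyword_hits = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([dense_hits, keyword_hits])
print(fused)  # ['doc_b', 'doc_a', 'doc_d', 'doc_c']
```

Documents that appear in both lists rise to the top, and a reranker would then reorder only this short fused list.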

This wasn't a one-size-fits-all lookup. The system dynamically pulls context from up to four different source types per query and uses the retrieved context to produce a single, factually grounded answer.

Orchestration and Trust: The Power of the Feedback Loop

To handle the complexity of multi-source retrieval and conditional logic, we chose LangGraph as our agent orchestration layer.

LangGraph acted as a dynamic router, not a static chain. It evaluated the user's intent, decided which sources to query (in parallel), handled fallbacks if a source failed, and then generated the final response. This stateful flow control ensured a predictable, scalable query lifecycle.
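The routing behaviour described above can be sketched in plain `asyncio`. This is not LangGraph's API: the keyword-based `route` function stands in for a model-driven intent check, and the three source functions are hypothetical stubs, one of which fails on purpose to show the fallback path.

```python
import asyncio


async def search_knowledge_base(query: str) -> list[str]:
    return [f"kb: note on {query}"]


async def search_tickets(query: str) -> list[str]:
    raise TimeoutError("ticket source unavailable")  # simulated outage


async def search_web(query: str) -> list[str]:
    return [f"web: page about {query}"]


SOURCES = {
    "knowledge_base": search_knowledge_base,
    "tickets": search_tickets,
    "web": search_web,
}


def route(query: str) -> list[str]:
    """Toy intent check; keyword rules stand in for an LLM-based router."""
    lowered = query.lower()
    chosen = ["knowledge_base"]
    if "ticket" in lowered or "customer" in lowered:
        chosen.append("tickets")
    if "latest" in lowered:
        chosen.append("web")
    return chosen


async def retrieve(query: str) -> dict:
    """Query the chosen sources in parallel and tolerate individual failures."""
    names = route(query)
    results = await asyncio.gather(
        *(SOURCES[name](query) for name in names), return_exceptions=True
    )
    context, failed = [], []
    for name, result in zip(names, results):
        if isinstance(result, Exception):
            failed.append(name)  # fallback: continue without this source
        else:
            context.extend(result)
    return {"context": context, "failed": failed}


if __name__ == "__main__":
    print(asyncio.run(retrieve("latest customer ticket trends")))
```

Recording which sources failed, rather than raising, lets the generation step still answer from the remaining context and lets the failure show up in traces.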

But a powerful tool is useless without trust. We closed the loop with a robust Observability and Evaluation strategy:

- Hallucination Detection: We deployed a privately hosted model (vectara/hallucination_evaluation_model) to continuously assess the factual accuracy of the CIU's outputs.

- System Validation: We used the RAGAS framework to systematically test the pipeline against real and synthetic data, validating the effectiveness of our retrieval strategies.

- Tracing: Langfuse was integrated across all service endpoints. This allowed engineers to trace the entire lifecycle of a complex query—from initial intent parsing through multiple retrieval steps and final generation—critical for debugging latency and accuracy issues in production.
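To show what per-stage tracing buys you, here is a minimal standard-library sketch that records a timed span for each step of a query. It does not use Langfuse's API; the `Trace` class, the stage names, and the sleep durations are assumptions made up for the example.

```python
import time
from contextlib import contextmanager


class Trace:
    """Collects one timed span per pipeline stage for a single query."""

    def __init__(self, query: str) -> None:
        self.query = query
        self.spans: list[dict] = []

    @contextmanager
    def span(self, name: str):
        start = time.perf_counter()
        status = "ok"
        try:
            yield
        except Exception:
            status = "error"  # keep the span, then let the error propagate
            raise
        finally:
            self.spans.append(
                {"name": name, "status": status,
                 "seconds": time.perf_counter() - start}
            )

    def slowest(self) -> str:
        """Name of the stage that took the longest."""
        return max(self.spans, key=lambda span: span["seconds"])["name"]


def answer(query: str) -> Trace:
    """Hypothetical query lifecycle; sleeps stand in for real work."""
    trace = Trace(query)
    with trace.span("intent"):
        time.sleep(0.001)
    with trace.span("retrieval"):
        time.sleep(0.05)
    with trace.span("generation"):
        time.sleep(0.001)
    return trace


if __name__ == "__main__":
    trace = answer("What changed in Q3?")
    for span in trace.spans:
        print(f'{span["name"]:<12}{span["status"]:<8}{span["seconds"]:.4f}s')
    print("slowest stage:", trace.slowest())
```

With spans like these attached to every query, a latency complaint can be narrowed to a specific stage instead of the pipeline as a whole.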

This multi-layered approach turned the CIU from a black box into a transparent, auditable system, which is essential for both engineering confidence and executive buy-in.

The End Result: Competitive Advantage Through Compliance

By late 2024, the CIU had achieved its goals: research tasks that once took hours were completed in minutes, boosting the velocity of high-value teams.

But the lasting insight is this: Privacy is the new performance differentiator in AI.

We didn't just build a smart tool; we created a secure environment where the client could safely leverage their most strategic asset—their proprietary data—without fear of external exposure. This allowed them to move faster than their competitors who were still stuck in endless legal reviews over third-party API usage.

"Building a Central Intelligence Unit isn't about code; it's about architecting trust. The most successful AI products aren't just the most accurate—they're the ones designed to de-risk the business and deliver value within the guardrails of the real world."

*This case study showcases a 2024 project for an EU-based client. Specific client details have been anonymized to protect confidentiality.*
