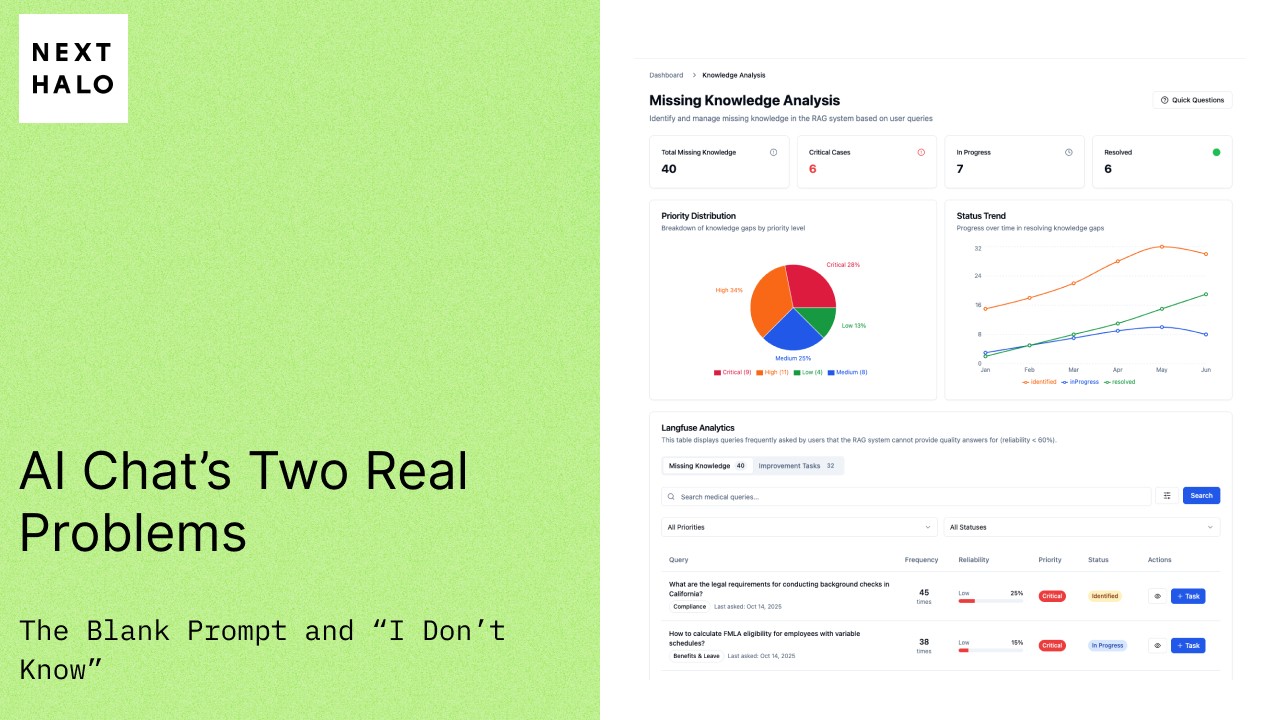

AI Chat's Two Real Problems: the Blank Prompt and "I Don't Know"

When a client asks for an enterprise RAG system, Day 1 is about making it work end-to-end: ingest and normalize the data, chunk and embed it, connect the retriever and model, and run it behind FastAPI/LangGraph—with user and admin UIs in React. Solid, observable, and maintainable—the baseline, not the finish line.

Our obsession is the Day 100 problem.

What happens after launch? What stops the system from becoming a "ghost town" app that users ignore? And how does it handle new information without a team of developers manually updating it?

The real challenge isn't building an AI; it's designing a learning ecosystem. We focused on two interconnected loops to solve the two biggest hurdles to adoption.

Loop 1: Solving the "Blank Page" Problem

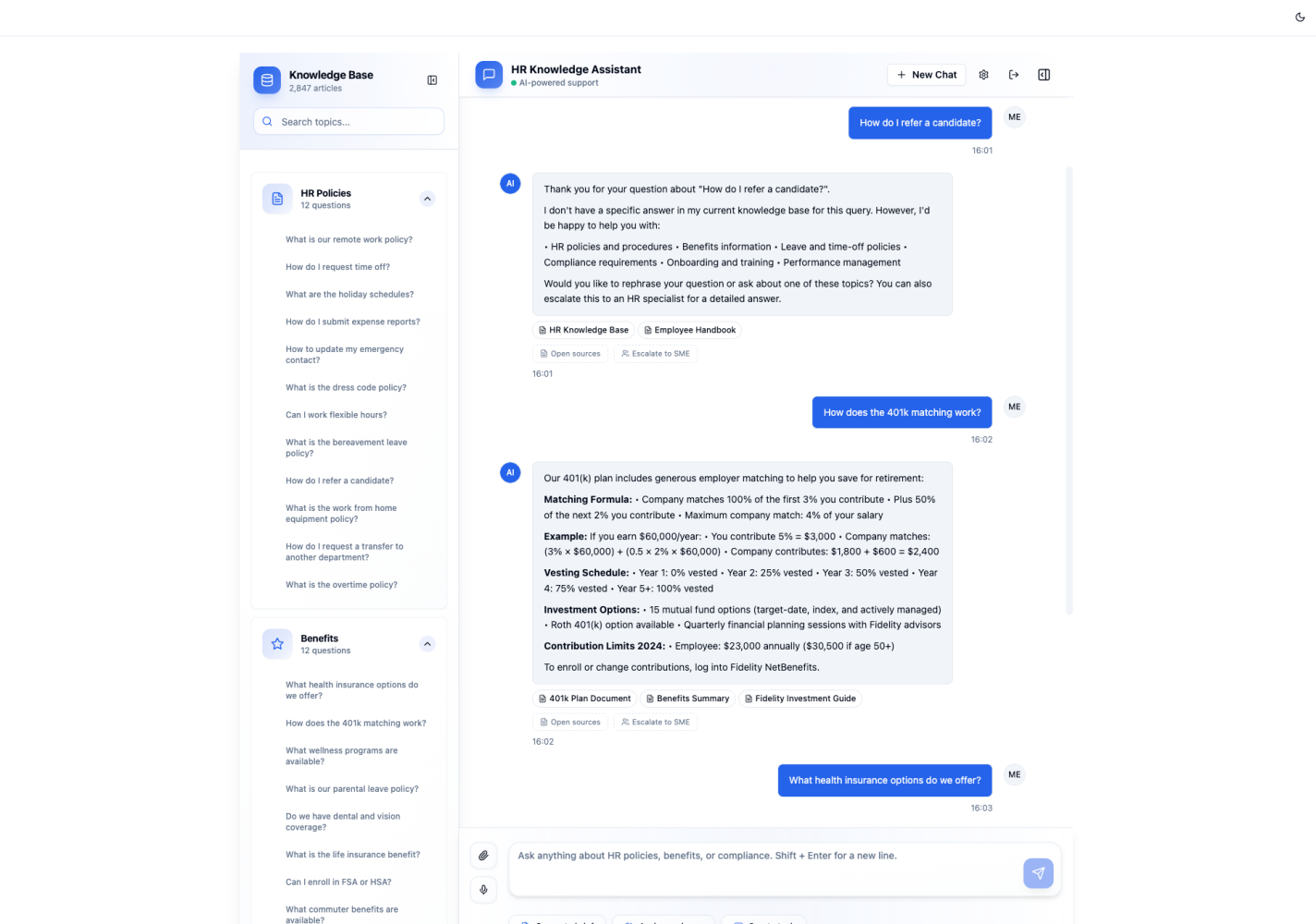

A new user opens a chatbot and sees a blank text box. They don't know what to ask, how to phrase it, or what the system even knows. This friction is where adoption fails.

Instead of an empty prompt, we greet the user with a dynamic, curated list of clickable questions.

- "What is our remote work policy?"

- "How do I request a transfer to another department?"

- ...

These aren't hardcoded. They are managed by domain experts from a simple back-office portal. When a new, important document is added to the knowledge base, the expert can add a corresponding example question in minutes.
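As a rough illustration of what the portal manages, here is a minimal in-memory sketch. The class names, fields, and file names are hypothetical and are not the client's actual schema; a real deployment would persist these records and put the store behind an authenticated admin API.

```python
from dataclasses import dataclass


@dataclass
class StarterQuestion:
    text: str
    source_document: str  # hypothetical: the knowledge-base document that answers it
    published: bool = True


class StarterQuestionStore:
    """In-memory stand-in for whatever the back-office portal persists to."""

    def __init__(self) -> None:
        self._questions: list[StarterQuestion] = []

    def add(self, text: str, source_document: str) -> StarterQuestion:
        """Called when an expert publishes a question for a new document."""
        question = StarterQuestion(text=text.strip(), source_document=source_document)
        self._questions.append(question)
        return question

    def unpublish(self, text: str) -> None:
        """Hide a question without deleting its record."""
        for question in self._questions:
            if question.text == text:
                question.published = False

    def for_welcome_screen(self, limit: int = 5) -> list[str]:
        """Return published questions, most recently added first, capped at `limit`."""
        visible = [q.text for q in reversed(self._questions) if q.published]
        return visible[:limit]


store = StarterQuestionStore()
store.add("What is our remote work policy?", "remote-work-policy.pdf")
store.add("How do I request a transfer to another department?", "transfers.pdf")
print(store.for_welcome_screen())
```

Showing the newest questions first is one possible design choice: it keeps recently added documents visible on the welcome screen.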

This small feature has a massive impact. It transforms the first-run experience from a guessing game into a guided tour, immediately proving value and showing users what the system knows and how to ask.

Loop 1 - Start smarter: domain experts publish clickable starter questions so users see what the system knows and how to ask.

Loop 2: Turning "I Don't Know" into Intelligence

No knowledge base is perfect. The most critical moment in an AI's life is when it's asked a question it can't answer.

For most systems, this is a failure. For us, it's the single most valuable piece of data we can get.

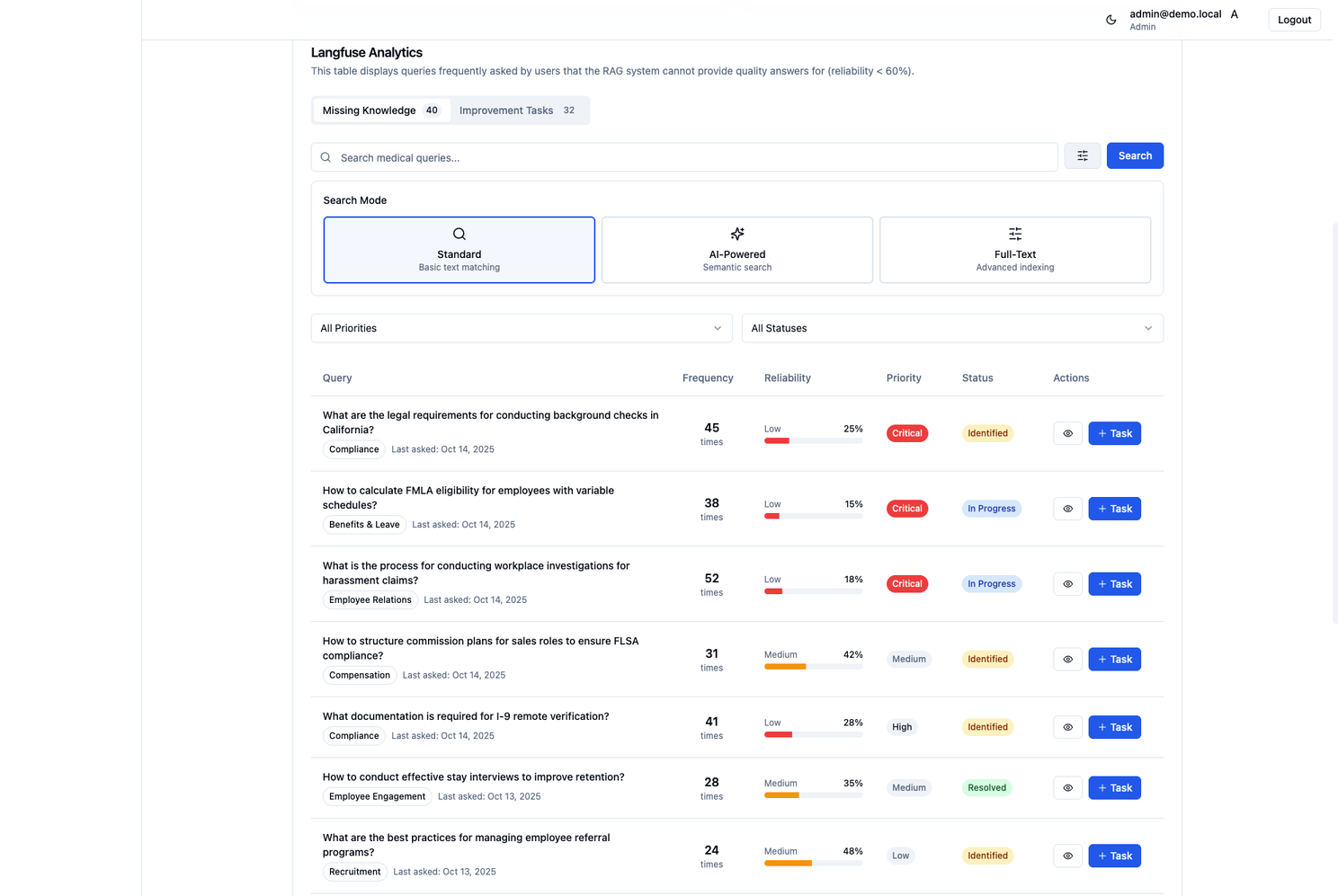

We needed a way to capture, cluster, and act on these knowledge gaps. This is more than just logging; it's building a business intelligence engine. We chose Langfuse as the observability backbone for three specific, non-negotiable reasons:

- Self-Hostable: It's open-source and can be self-hosted, which was a hard requirement for our client's data residency and strict compliance needs.

- Framework-Agnostic: It worked seamlessly with our custom stack, giving us architectural freedom without vendor lock-in.

- API-First: This was the key. It allowed us to build our custom intelligence layer on top of its data.

Here is the feedback loop we built:

- A user asks a question. The RAG system finds no relevant information and responds, "I don't have enough information to answer that."

- Langfuse immediately traces and flags this event.

- Our custom admin dashboard ingests these traces and automatically clusters the unanswered questions by topic and frequency.
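The clustering step above can be sketched as follows. This is a simplified stand-in, not the production method: it assumes the flagged traces have already been exported as `(question, answer)` pairs, and it groups questions by keyword overlap (Jaccard similarity) where a real system would more likely compare embeddings. The stopword list, threshold, and all names are illustrative assumptions.

```python
import re
from collections import Counter
from dataclasses import dataclass, field

FALLBACK_ANSWER = "I don't have enough information to answer that."
STOPWORDS = {"a", "an", "the", "is", "are", "what", "how", "do", "i",
             "our", "to", "for", "of", "about", "can", "my", "we", "in", "on"}


def keywords(question: str) -> list[str]:
    """Lowercase content words in question order, de-duplicated."""
    words = re.findall(r"[a-z]+", question.lower())
    return list(dict.fromkeys(w for w in words if w not in STOPWORDS))


def jaccard(a: set[str], b: set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


@dataclass
class GapCluster:
    terms: Counter = field(default_factory=Counter)  # word -> questions using it
    questions: list[str] = field(default_factory=list)

    @property
    def frequency(self) -> int:
        return len(self.questions)

    @property
    def topic(self) -> str:
        """The two most common words serve as a crude topic label."""
        return " ".join(word for word, _ in self.terms.most_common(2))


def cluster_gaps(traces: list[tuple[str, str]], threshold: float = 0.3) -> list[GapCluster]:
    """Greedily group unanswered questions, then rank clusters by frequency."""
    clusters: list[GapCluster] = []
    for question, answer in traces:
        if answer != FALLBACK_ANSWER:  # only the "I don't know" events matter here
            continue
        words = keywords(question)
        match = next(
            (c for c in clusters if jaccard(set(words), set(c.terms)) >= threshold),
            None,
        )
        if match is None:
            match = GapCluster()
            clusters.append(match)
        match.terms.update(words)
        match.questions.append(question)
    return sorted(clusters, key=lambda c: c.frequency, reverse=True)


traces = [
    ("How long is parental leave?", FALLBACK_ANSWER),
    ("What is our remote work policy?", "answered from the knowledge base"),
    ("Is parental leave paid?", FALLBACK_ANSWER),
    ("What are the overtime rules?", FALLBACK_ANSWER),
]
for cluster in cluster_gaps(traces):
    print(cluster.frequency, cluster.topic)
```

Sorting by frequency is what turns the raw log into a work list: the topics asked about most often appear at the top.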

The client's domain experts don't have to guess what's missing. They log in and see a prioritized, data-driven work list.

They can see, "37 people asked about parental leave and overtime rules."

Right next to that insight is a button: "Add to Knowledge Base."

Loop 2: "I don't know" becomes a prioritized backlog—clustered by topic & frequency.

The expert uploads the definitive document. The system indexes it. The gap is closed. The next user who asks gets a perfect, authoritative answer. The entire loop happens without a single line of code from a developer.

A System That Teaches Itself What to Learn

This is where the two loops connect.

The onboarding questions (Loop 1) get users asking high-quality questions. The analysis of unanswered questions (Loop 2) identifies the most critical knowledge gaps. The insights from Loop 2 are then used to update the onboarding questions in Loop 1, showcasing the system's new capabilities.
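One way to picture the hand-off from Loop 2 back to Loop 1 is a small function that proposes new starter questions from gaps that now have a document behind them. This is a sketch under assumptions: the `ResolvedGap` record and its fields are hypothetical, the sample counts are illustrative, and in practice an expert would review the proposal before anything is published.

```python
from dataclasses import dataclass


@dataclass
class ResolvedGap:
    topic: str
    representative_question: str  # one question picked from the cluster
    times_asked: int
    document_added: bool  # True once an expert has uploaded the answer


def refresh_starter_questions(
    current: list[str], gaps: list[ResolvedGap], limit: int = 5
) -> list[str]:
    """Put the most-asked, now-answerable questions ahead of the existing starters."""
    by_demand = sorted(gaps, key=lambda g: g.times_asked, reverse=True)
    promoted = [g.representative_question for g in by_demand if g.document_added]
    merged = list(dict.fromkeys(promoted + current))  # de-duplicate, keep order
    return merged[:limit]


current = [
    "What is our remote work policy?",
    "How do I request a transfer to another department?",
]
gaps = [
    ResolvedGap("overtime", "What are the overtime rules?", 12, document_added=False),
    ResolvedGap("parental leave", "How long is parental leave?", 37, document_added=True),
]
print(refresh_starter_questions(current, gaps, limit=3))
```

Gaps that are still open are deliberately excluded, so the welcome screen only advertises questions the system can now answer.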

Progress compounds with every step.

This architecture turns a static tool into a system that improves with use, grounded in your experts and your data. AI scales access—not authority—by building the fastest bridge from user questions to expert answers.

"The Day 1 problem is about code. The Day 100 problem is about creating systems that listen, learn, and empower people."

After two decades in this field, we know which one delivers lasting value.

*All UI shown is a mockup for illustrative purposes only and does not reflect the client's actual data or deployment. The platform is currently in production and used in regulated environments under strict NDA.
