AI Platform Provider

Enterprise AI / PaaS

2023 - 2024

How do you build and launch a competitive, commercial AI platform for the enterprise market? The primary challenge was not just functionality, but trust. Our client needed to engineer the core intelligence engine for their new platform, which had to be "Secure by Design" and fully GDPR-compliant from day one. This meant ensuring that sensitive enterprise data from tools like Jira, Confluence, or Zendesk would never be exposed to third-party APIs.

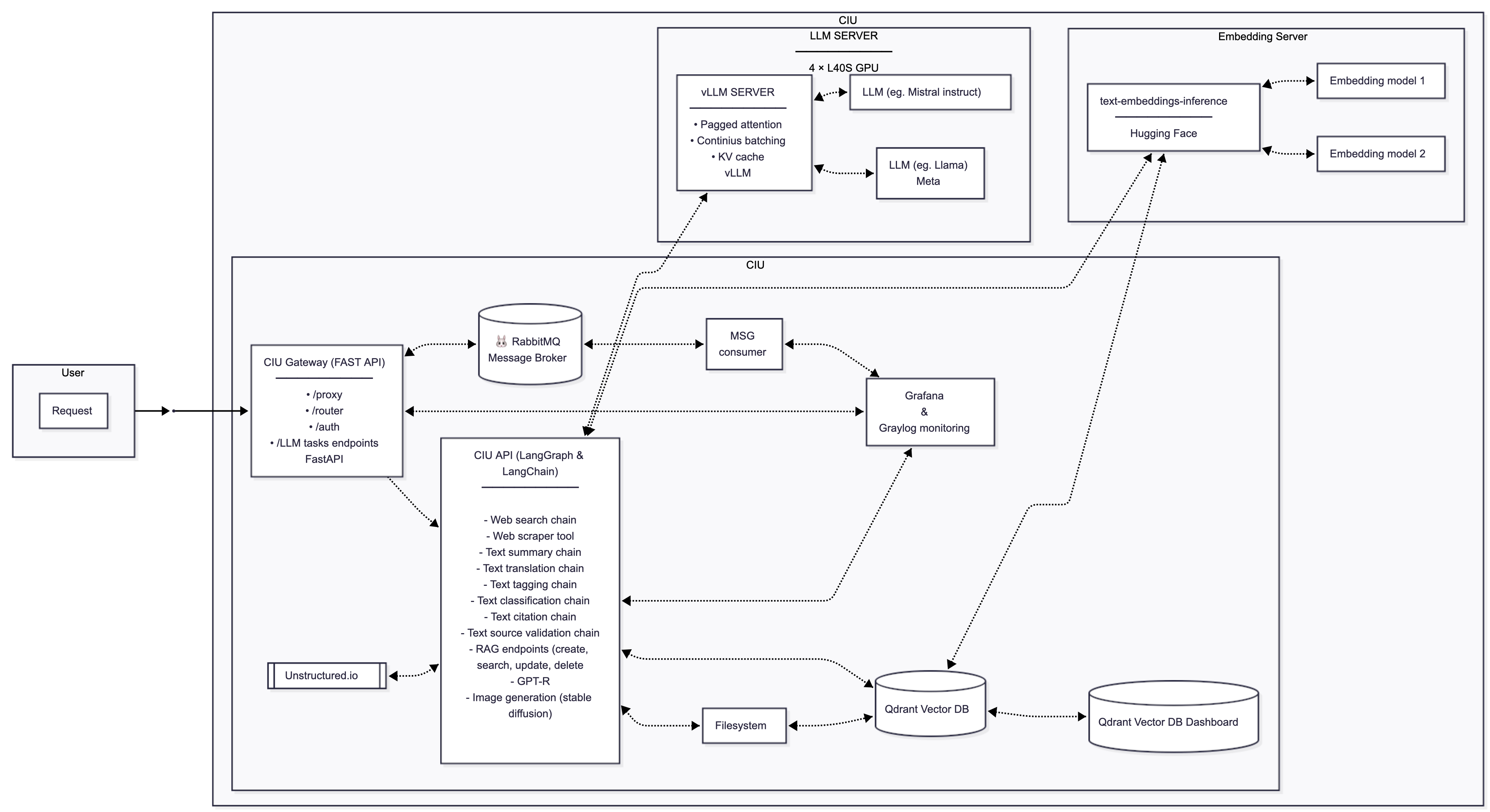

We architected and delivered the Central Intelligence Unit (CIU), the production-ready, scalable, and secure core engine for their commercial platform. This modular AI co-pilot integrates with existing enterprise tools and runs entirely within a private, secure environment. By engineering a sophisticated RAG pipeline on privately hosted LLMs and embedding models, we provided a "Secure by Design" foundation in which no customer data is sent to third-party APIs, giving their enterprise customers full data sovereignty.

At the end of 2023, our client, an emerging AI platform provider, had a critical business objective: to architect and develop the core engine for their new commercial AI platform. The key challenge was not just to innovate but to build a product that was "Secure by Design" from the ground up, guaranteeing enterprise-grade data privacy. This was essential to their go-to-market strategy: to attract enterprise clients in the EU, the solution had to comply fully with stringent regulations such as GDPR.

Our team began the project with in-depth consulting. Instead of offering a one-size-fits-all product, we dedicated ourselves to understanding the client's commercial objectives, technological constraints, and strategic opportunities. Based on this analysis, we designed, engineered, and deployed the Central Intelligence Unit (CIU) – the scalable and secure foundation for their new commercial product.

The CIU functions as a central "brain" or AI co-pilot that integrates with existing enterprise tools (like Confluence, Jira, Slack, and Zendesk). Users can ask a question or upload a document, and the system autonomously finds, processes, and summarizes the most relevant information to provide an accurate answer. A key advantage is that the entire system operates within the client's private and secure environment, safeguarding confidential data.

By late 2024, the CIU was a production-ready, scalable, and secure engine, powering the client's successful platform launch and delivering value to their end users.

This project showcases our ability to guide clients through the complex world of AI, from initial concept to the deployment of a production-grade engine that gives our client a powerful, secure, and commercially viable product.

The project's technical objective, initiated in late 2023, was to create the centralized, "AI-native" core engine for a commercial platform, capable of autonomous data gathering, interpretation, and action. Key challenges included handling sensitive data securely, building a scalable architecture for Large Language Models (LLMs), reducing reliance on external APIs, and ensuring the entire ecosystem was designed to be "Secure by Design" and GDPR-compliant from the ground up.

From its inception, the system was designed as a modular, containerized ecosystem.

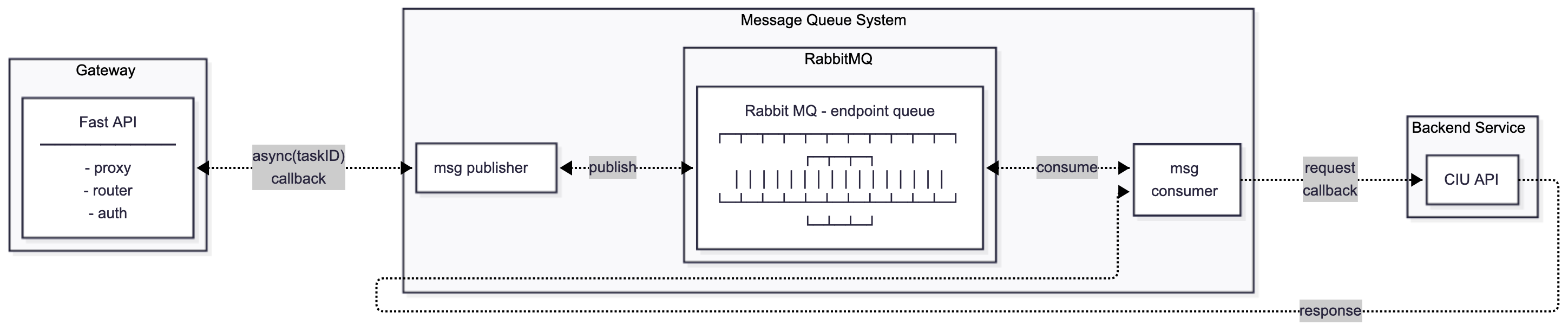

Core Stack: The system's backbone is Python, with FastAPI serving lightweight endpoints, powered by LangChain and LangGraph, for specific tasks such as classification and summarization. The entire infrastructure is containerized using Docker.

Gateway and Routing: We implemented Traefik as a reverse proxy to manage routing and security behind a single gateway. This involved configuring Host rules for each new service, securing endpoints with HTTPS via Let's Encrypt certificates, and adding basic authentication where necessary.
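Per-service Traefik configuration of this kind is commonly expressed as Docker labels. The sketch below generates them in Python so the pattern is visible in one place. The service name, hostname, port, entrypoint name `websecure`, and resolver name `letsencrypt` are hypothetical placeholders, not the project's actual values; the label keys follow the syntax of Traefik's Docker provider (v2 and later).

```python
from typing import Dict, Optional


# Sketch: build the Docker labels Traefik reads to route one container.
# Assumption: "websecure" and "letsencrypt" must match an entrypoint and a
# certificate resolver defined in Traefik's static configuration.
def traefik_labels(
    service: str,
    host: str,
    port: int,
    cert_resolver: str = "letsencrypt",
    basic_auth_users: Optional[str] = None,
) -> Dict[str, str]:
    router = f"traefik.http.routers.{service}"
    labels = {
        "traefik.enable": "true",
        # Host rule: requests for this hostname are routed to this service
        f"{router}.rule": f"Host(`{host}`)",
        # Accept traffic only on the HTTPS entrypoint
        f"{router}.entrypoints": "websecure",
        # Let Traefik obtain and renew the certificate via the ACME resolver
        f"{router}.tls.certresolver": cert_resolver,
        # Port the container listens on inside the Docker network
        f"traefik.http.services.{service}.loadbalancer.server.port": str(port),
    }
    if basic_auth_users:
        middleware = f"{service}-auth"
        # htpasswd-style "user:hash" entries, comma-separated for several users
        labels[f"traefik.http.middlewares.{middleware}.basicauth.users"] = basic_auth_users
        labels[f"{router}.middlewares"] = middleware
    return labels
```

In a docker-compose file these entries go under the service's `labels:` key as `key=value` strings; any `$` characters in an htpasswd hash must be doubled (`$$`) there to avoid variable interpolation.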

Asynchronous Processing: To coordinate tasks between microservices and ensure scalability, we implemented RabbitMQ as a robust message broker. This was essential for efficiently managing long-running, resource-intensive processes like web scraping or complex document analysis.
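The value of a message broker here is that the API can accept a long-running job and return at once while workers process it separately. The minimal sketch below illustrates that work-queue pattern using Python's standard `queue` module as an in-process stand-in for RabbitMQ; it is not RabbitMQ client code, and `submit`, `slow_task`, and `worker` are hypothetical names. Calling `task_done()` plays roughly the role of acknowledging a message once it has been handled.

```python
import queue
import threading
import uuid
from typing import Dict

# In-process stand-in for a RabbitMQ work queue (assumption: the real system
# publishes to the broker through a client library, not queue.Queue).
jobs: queue.Queue = queue.Queue()
results: Dict[str, str] = {}
results_lock = threading.Lock()


def submit(url: str) -> str:
    """Called from an API endpoint: enqueue the job and return immediately."""
    job_id = str(uuid.uuid4())
    jobs.put((job_id, url))
    return job_id


def slow_task(url: str) -> str:
    # Hypothetical placeholder for web scraping or document analysis
    return f"processed {url}"


def worker() -> None:
    """Consume jobs until a None sentinel arrives."""
    while True:
        item = jobs.get()
        try:
            if item is None:
                return
            job_id, url = item
            output = slow_task(url)
            with results_lock:
                results[job_id] = output
        finally:
            # Comparable to acknowledging a message after it has been handled
            jobs.task_done()
```

Because producers and consumers only share the queue, workers (in production, separate consumer containers) can be scaled independently of the API that submits the jobs.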

The CIU rapidly evolved from simple API calls to external LLMs (like OpenAI's GPT-3.5 and GPT-4 for early chains such as chat_on_report) to a sophisticated RAG pattern that delivers contextually relevant and factually grounded answers.
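In a RAG pattern, grounding largely depends on how the retrieved context is handed to the model. The sketch below is a minimal illustration, not the project's actual prompt: the `Chunk` type, the instruction wording, and the character budget are assumptions. It numbers each retrieved chunk together with its source so the model can cite it, and tells the model to say so when the context does not contain the answer.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Chunk:
    source: str  # e.g. a Confluence page title or a Zendesk article id
    text: str


def build_grounded_prompt(question: str, chunks: List[Chunk], max_chars: int = 4000) -> str:
    """Assemble a prompt that restricts the model to the retrieved context."""
    parts: List[str] = []
    used = 0
    for i, chunk in enumerate(chunks, start=1):
        block = f"[{i}] ({chunk.source})\n{chunk.text}"
        if used + len(block) > max_chars:
            break  # stay within a rough context budget
        parts.append(block)
        used += len(block)
    context = "\n\n".join(parts)
    return (
        "Answer the question using only the context below. "
        "Cite sources as [n]. If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )
```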

Vector Database: We chose Qdrant as our vector database for storing and querying text embeddings. Its advanced filtering capabilities were leveraged to enhance retrieval precision across different RAG strategies.
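The effect of payload filtering can be illustrated without a running Qdrant instance. The sketch below is a brute-force stand-in: it applies an exact-match filter on each point's payload and then ranks the remaining points by cosine similarity. The `tenant` and `source` payload keys are hypothetical. In the Qdrant Python client, a comparable condition is expressed as `models.Filter(must=[models.FieldCondition(key=..., match=models.MatchValue(value=...))])` and passed to the search call, and Qdrant evaluates it inside its index rather than by scanning every point.

```python
import math
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple


@dataclass
class Point:
    id: int
    vector: List[float]
    payload: Dict[str, Any] = field(default_factory=dict)


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def filtered_search(
    points: List[Point], query: List[float], must: Dict[str, Any], limit: int = 3
) -> List[Tuple[int, float]]:
    """Brute-force analogue of a filtered vector search."""
    # 1. Keep only points whose payload matches every key/value in `must`
    candidates = [p for p in points if all(p.payload.get(k) == v for k, v in must.items())]
    # 2. Rank the survivors by cosine similarity to the query vector
    ranked = sorted(candidates, key=lambda p: cosine(p.vector, query), reverse=True)
    return [(p.id, round(cosine(p.vector, query), 3)) for p in ranked[:limit]]
```

Filtering before ranking matters: a point that is very similar to the query but belongs to another tenant is never returned, however high its similarity.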

Private LLM & Embedding Servers: To ensure data privacy and optimize costs, we built a dedicated Embedding Server, initially using Hugging Face models and LangChain's HuggingFaceInferenceAPIEmbeddings class. We also hosted advanced LLMs, such as neuralmagic/Llama-3.1-Nemotron-70B-Instruct-HF-FP8-dynamic, on a private GPU cluster (4x L40s) using vLLM for high-throughput serving. This move was not only critical for flexibility and security but also a foundational requirement for the product's "Secure by Design" architecture and its GDPR compliance promise to customers. By hosting both the embedding and generation models in-house, we ensured that no sensitive user data, from query contents to entire uploaded documents, ever left the client's secure infrastructure.
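vLLM can expose a served model through an OpenAI-compatible HTTP API (for example `/v1/chat/completions`), so application code can address a private cluster much as it would a hosted API. The sketch below builds such a request with the standard library and adds a simple egress guard that refuses any host that is neither on an allowlist of internal endpoints nor a private IP address. The hostnames `llm.internal` and `embeddings.internal`, the port, and the guard itself are illustrative assumptions rather than the project's configuration. The function only constructs the request; it does not send it.

```python
import ipaddress
import json
import urllib.request
from urllib.parse import urlparse

# Hypothetical allowlist of in-house inference hosts
PRIVATE_HOSTS = {"llm.internal", "embeddings.internal"}


def is_private_target(url: str) -> bool:
    host = urlparse(url).hostname or ""
    if host in PRIVATE_HOSTS:
        return True
    try:
        return ipaddress.ip_address(host).is_private
    except ValueError:
        return False  # unknown hostname: treat it as external


def chat_request(base_url: str, model: str, question: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-compatible chat request to a vLLM server."""
    url = base_url.rstrip("/") + "/v1/chat/completions"
    if not is_private_target(url):
        raise PermissionError(f"refusing to send user data to non-private host: {url}")
    body = {
        "model": model,  # must match the model name the vLLM server is serving
        "messages": [{"role": "user", "content": question}],
        "temperature": 0.0,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Sending the request is then a call to `urllib.request.urlopen(req)`; the point of the guard is that a misconfigured base URL fails loudly instead of leaking a query to an external service.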

Advanced RAG Techniques: To maximize retrieval accuracy, we implemented a suite of sophisticated techniques, including hybrid search algorithms, reranking mechanisms, Contextual Chunk Headers, Semantic Chunking, and Document Augmentation.
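Hybrid search needs a way to merge a keyword ranking with a vector ranking. The source does not name the fusion method, so the sketch below uses Reciprocal Rank Fusion (RRF), a common choice, with the conventional constant k = 60. It also shows a contextual chunk header in its simplest form: prepending the document title and section to a chunk before it is embedded. Both functions and the header format are illustrative assumptions; a reranking model would typically reorder the fused results afterwards and is not shown.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Fuse several ranked id lists (e.g. keyword and vector search) into one."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # Each list contributes 1 / (k + rank); items ranked well in both lists win
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda d: scores[d], reverse=True)


def with_chunk_header(doc_title: str, section: str, chunk: str) -> str:
    """Prepend document context so an isolated chunk still embeds meaningfully."""
    return f"Document: {doc_title}\nSection: {section}\n\n{chunk}"
```

RRF uses only ranks, not raw scores, so it can combine a keyword score and a cosine similarity without normalizing them onto a common scale.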

Diverse Data Sources: The RAG system was built to retrieve information from multiple sources, forming the basis for the platform's commercial "connectors." These included an internal knowledge base in Qdrant, live web search results, and content from enterprise tools, such as Zendesk support documents.
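A shared interface is one way to let new connectors plug into the same retrieval step. In the sketch below, which is an assumption rather than the platform's actual code, every source implements a `search(query, limit)` method; `StaticConnector` is a hypothetical stub standing in for Qdrant, web search, or Zendesk, and a failing source is skipped instead of breaking the whole answer.

```python
from dataclasses import dataclass
from typing import Dict, List, Protocol


@dataclass
class Hit:
    source: str
    text: str
    score: float  # assumption: every connector returns scores on a common 0-1 scale


class Connector(Protocol):
    name: str

    def search(self, query: str, limit: int) -> List[Hit]: ...


class StaticConnector:
    """Hypothetical stub standing in for a Qdrant, web-search, or Zendesk connector."""

    def __init__(self, name: str, docs: Dict[str, float]) -> None:
        self.name = name
        self.docs = docs

    def search(self, query: str, limit: int) -> List[Hit]:
        hits = [
            Hit(self.name, text, score)
            for text, score in self.docs.items()
            if query.lower() in text.lower()
        ]
        return sorted(hits, key=lambda h: h.score, reverse=True)[:limit]


def search_all(connectors: List[Connector], query: str, limit: int = 5) -> List[Hit]:
    hits: List[Hit] = []
    for connector in connectors:
        try:
            hits.extend(connector.search(query, limit))
        except Exception:
            continue  # one unavailable source should not break the whole answer
    return sorted(hits, key=lambda h: h.score, reverse=True)[:limit]
```

Adding a new source then means writing one class with a `search` method; if the sources' scores are not comparable, a rank-based fusion such as RRF is a safer way to merge them than sorting by raw score.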

For managing complex, multi-step workflows, we integrated LangGraph, an advanced orchestration framework for agent-level flow control. LangGraph functions as a dynamic router, evaluating each user request and directing it to the most appropriate processing chain, while its stateful graph structure manages the entire query lifecycle.

LangGraph's modular structure of nodes and conditional edges provided superior scalability and traceability compared to basic LangChain chains, making it our default choice for easily scaling the platform with new enterprise connectors and agent-based tasks.
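What a stateful graph with a conditional edge does can be sketched in plain Python. This is not LangGraph's API: in LangGraph the same structure would be built with `StateGraph`, `add_node`, and `add_conditional_edges`. The node names, the keyword-based classifier (standing in for an LLM classification step), and the state fields below are hypothetical.

```python
from typing import Callable, Dict, Optional, TypedDict


class State(TypedDict, total=False):
    question: str
    route: str
    answer: str


def classify(state: State) -> State:
    # Hypothetical heuristic; in practice this would be an LLM classification call
    q = state["question"].lower()
    return {"route": "summarize" if q.startswith("summarize") else "rag"}


def summarize(state: State) -> State:
    return {"answer": f"summary of: {state['question'][len('summarize'):].strip()}"}


def rag(state: State) -> State:
    return {"answer": f"grounded answer to: {state['question']}"}


NODES: Dict[str, Callable[[State], State]] = {
    "classify": classify,
    "summarize": summarize,
    "rag": rag,
}
# Edges: after each node, decide the next node from the state (None means END)
EDGES: Dict[str, Callable[[State], Optional[str]]] = {
    "classify": lambda s: s["route"],  # the conditional edge
    "summarize": lambda s: None,
    "rag": lambda s: None,
}


def run(question: str, entry: str = "classify") -> State:
    state: State = {"question": question}
    node: Optional[str] = entry
    while node is not None:
        # Each node returns a partial update that is merged into the shared state
        state = {**state, **NODES[node](state)}
        node = EDGES[node](state)
    return state
```

Adding a connector or agent task amounts to registering one more node and extending the router's choices, which is the scaling property the paragraph above attributes to LangGraph.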

Trust in an AI system is paramount. We implemented a multi-layered approach to evaluation and monitoring.

Hallucination Detection: We deployed the vectara/hallucination_evaluation_model on our private GPU cluster to assess the factual accuracy of generated outputs, thereby boosting user trust.
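A hallucination model such as vectara/hallucination_evaluation_model scores a (premise, hypothesis) pair, with a higher score meaning the hypothesis is more consistent with the premise. The sketch below shows one way such a score could gate an answer sentence by sentence. To keep it runnable without model weights, `overlap_scorer` is a toy word-overlap stand-in, not the Vectara model, and the 0.5 threshold is an assumption.

```python
import re
from typing import Callable, List, Tuple

# scorer(premise, hypothesis) -> float in [0, 1]; higher means more consistent
Scorer = Callable[[str, str], float]


def split_sentences(text: str) -> List[str]:
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def unsupported_sentences(
    context: str, answer: str, scorer: Scorer, threshold: float = 0.5
) -> List[Tuple[str, float]]:
    """Return (sentence, score) pairs the scorer judges unsupported by the context."""
    flagged = []
    for sentence in split_sentences(answer):
        score = scorer(context, sentence)
        if score < threshold:
            flagged.append((sentence, score))
    return flagged


def overlap_scorer(premise: str, hypothesis: str) -> float:
    """Toy stand-in for a real model: share of hypothesis words found in the premise."""
    premise_words = set(re.findall(r"\w+", premise.lower()))
    words = re.findall(r"\w+", hypothesis.lower())
    return sum(w in premise_words for w in words) / len(words) if words else 1.0
```

Using the real model means replacing `overlap_scorer` with a function that calls it; flagged sentences could then be hidden, marked for the user, or sent back for regeneration.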

System Evaluation: We used the RAGAS framework for thorough system evaluation, testing our RAG pipeline with both real-world and synthetic datasets to validate its effectiveness and ensure production-ready performance.
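RAGAS scores qualities such as faithfulness, answer relevancy, and context precision and recall, largely with an LLM acting as judge. A deterministic retrieval check can complement it. The sketch below, which is not part of RAGAS, computes hit rate and mean reciprocal rank (MRR) at k over labeled question and chunk pairs, which could come from real or synthetic datasets; `EvalCase` and the `retrieve` callable are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set


@dataclass
class EvalCase:
    question: str
    relevant_ids: Set[str]  # ground-truth chunk ids (hand-labeled or synthetic)


def evaluate_retrieval(
    cases: List[EvalCase], retrieve: Callable[[str], List[str]], k: int = 5
) -> Dict[str, float]:
    if not cases:
        raise ValueError("need at least one evaluation case")
    hits = 0
    reciprocal_rank_sum = 0.0
    for case in cases:
        ranked = retrieve(case.question)[:k]
        # 1-based rank of the first relevant chunk, or None if none was retrieved
        first = next(
            (i for i, doc_id in enumerate(ranked, start=1) if doc_id in case.relevant_ids),
            None,
        )
        if first is not None:
            hits += 1
            reciprocal_rank_sum += 1.0 / first
    n = len(cases)
    return {"hit_rate": hits / n, "mrr": reciprocal_rank_sum / n}
```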

Observability: We established comprehensive system monitoring. Langfuse was integrated for detailed tracing of all LangServe chain endpoints and LangGraph endpoints. This involved an upgrade from Langfuse v2 to v3, which required adding new Docker services such as MinIO (S3-compatible object storage) and integrating them securely via Traefik. Tracing was attached using callback handlers at the top of the chain so that the entire process was monitored. Finally, Grafana and Graylog provided logging and analytics dashboards for fine-grained performance tracking.
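Attaching tracing once at the top works because nested steps inherit the active trace. With LangChain, a Langfuse `CallbackHandler` is passed via `config={"callbacks": [handler]}` when the top-level chain is invoked, and LangChain propagates it to child runs. The standard-library sketch below reproduces that idea with `contextvars`, so each nested span records its parent automatically; the span names and record fields are hypothetical, and this is not Langfuse's API.

```python
import contextvars
import time
from contextlib import contextmanager
from typing import Any, Dict, Iterator, List, Optional

_current: contextvars.ContextVar = contextvars.ContextVar("current_span", default=None)
finished: List[Dict[str, Any]] = []


@contextmanager
def span(name: str) -> Iterator[Dict[str, Any]]:
    parent: Optional[Dict[str, Any]] = _current.get()
    record = {
        "name": name,
        "parent": parent["name"] if parent else None,
        "start": time.perf_counter(),
    }
    token = _current.set(record)  # nested spans now see this one as their parent
    try:
        yield record
    finally:
        record["duration_s"] = time.perf_counter() - record["start"]
        _current.reset(token)
        finished.append(record)


def retrieve(question: str) -> List[str]:
    with span("retrieve"):
        return ["chunk-1"]


def generate(question: str, context: List[str]) -> str:
    with span("generate"):
        return f"answer using {len(context)} chunk(s)"


def handle_request(question: str) -> str:
    # Tracing is opened once at the top; nested steps attach to it automatically
    with span("chat_request"):
        return generate(question, retrieve(question))
```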

By late 2024, the CIU had matured into a fully autonomous, scalable, and secure core engine for a commercial AI platform. The project demonstrates our studio's expertise in architecting and delivering complex, AI-First systems that are not only powerful and scalable but also secure, private, and compliant with regulations like GDPR. By successfully integrating advanced RAG, private LLM hosting, dynamic workflow orchestration with LangGraph, and a robust evaluation and observability pipeline, we delivered a production-grade solution that provides the client with a significant competitive advantage for their commercial product.
